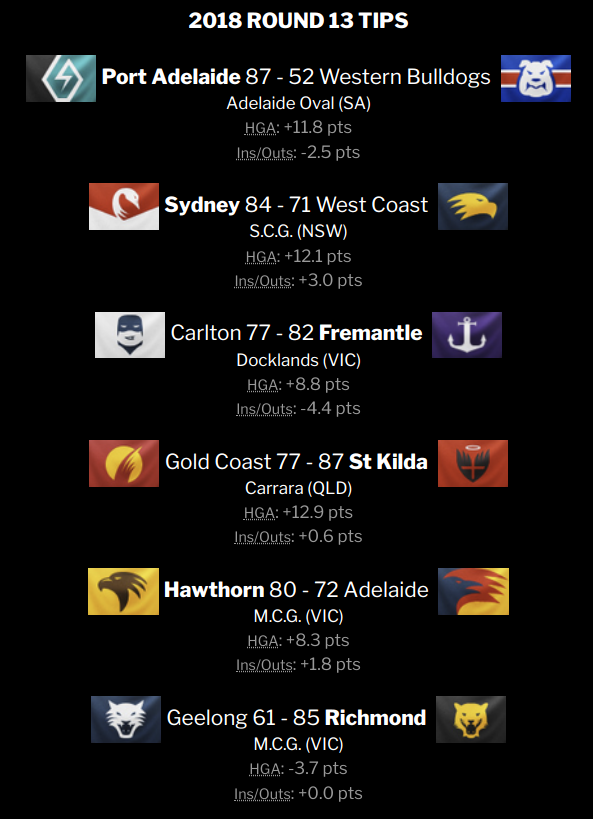

Yes, I agree with this. If you take 94 points out of any team this year, it'll hurt their chart position quite a lot. That chart suggests that we'd be moving that way.

If my eyeballing is correct, since the hypothetical loss to Brisbane, we've gained 10 defence points, which is more than halfway to our current position. A loss in that game is almost as much of an outlier as the win we ended up having.

The funny thing about the Brisbane game is that the next round, Richmond defeated Melbourne by 48 and moved dead right again. So the only thing Squiggle saw wrong about Richmond's position was that it wasn't far right enough.

Anyway, Richmond's current position isn't due to just one thing. They entered the season at the head of the pack and have delivered very good results, with lots of thumping wins plus acceptably honorable losses away interstate to other strong teams. It's just a solid raft of numbers.

The other teams that started with good 2017 track records (Sydney, Geelong, Port Adelaide, Adelaide, GWS) have been inconsistent or outright poor, while teams that have performed strongly (West Coast, North Melbourne, Melbourne, Collingwood) started from much further back. It's not common for a team to rise from the lower half of the pack to flag contention in a single year, so they have a bit more to prove.